Data Services

We prepare production-grade datasets for computer vision, natural language processing, medical imaging, and audio/video models, from precise ground-truth labeling to reliable classification and robust synthetic augmentation.

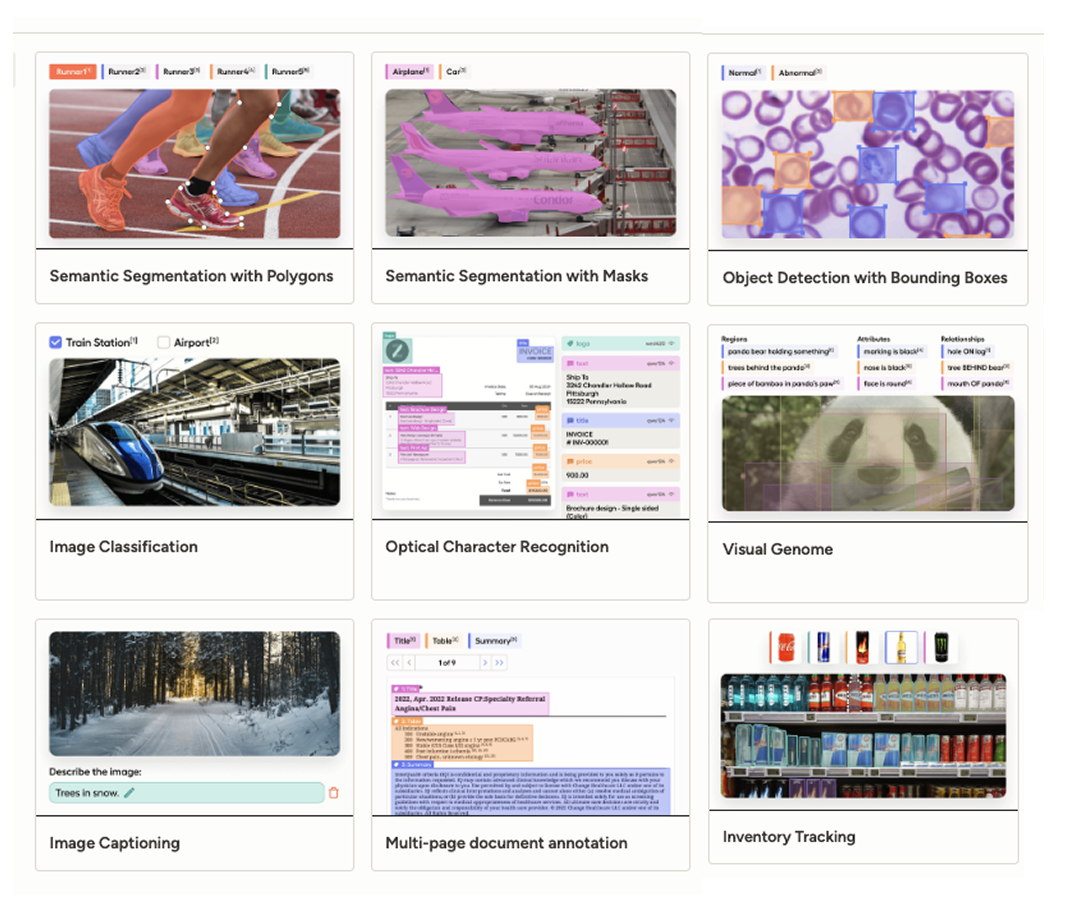

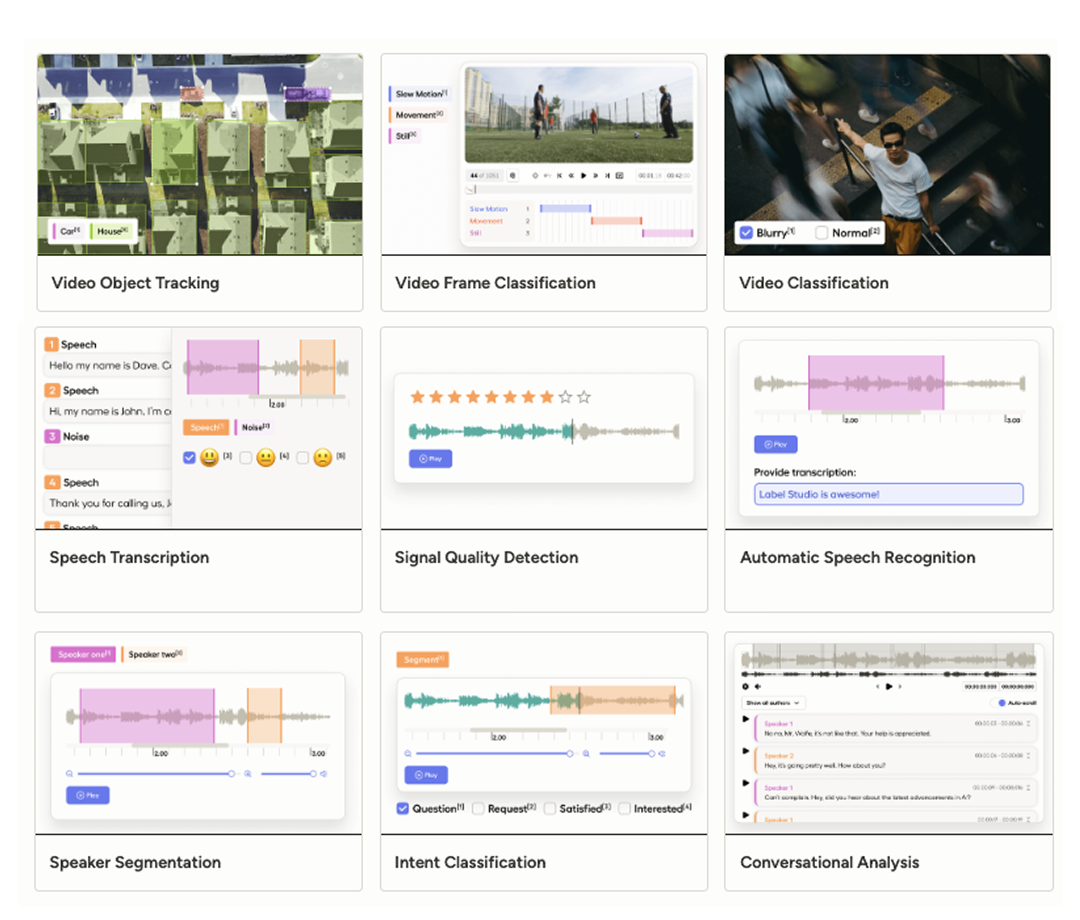

Data Labeling & Annotation

The process of assigning labels to raw input data (labeling) and marking specific features within it (annotation) to establish the ground truth used to train and validate models.

To Train Computer Vision Models (Object Detection)

We draw bounding boxes and polygons around objects, or place key points on them, so models learn to detect and locate what they see in the physical world.

Use Case: Autonomous driving systems recognizing pedestrians, lane markings, and traffic signs.
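For illustration, a single object-detection annotation could be stored as a record like the one below. This is a minimal sketch: the `ObjectAnnotation` fields and the (x_min, y_min, width, height) box convention are assumptions loosely modeled on COCO-style exports, not a fixed delivery format. The helper `iou` computes intersection over union, a common measure of how closely two annotators' boxes agree during review.

```python
from dataclasses import dataclass, field


# Hypothetical record layout; field names and the box convention are assumptions.
@dataclass
class ObjectAnnotation:
    label: str
    bbox: tuple                                    # (x_min, y_min, width, height) in pixels
    polygon: list = field(default_factory=list)    # outline vertices [(x, y), ...]
    keypoints: dict = field(default_factory=dict)  # named points {"name": (x, y)}


def bbox_from_polygon(polygon):
    """Tightest axis-aligned box (x_min, y_min, width, height) enclosing a polygon."""
    xs = [x for x, _ in polygon]
    ys = [y for _, y in polygon]
    return (min(xs), min(ys), max(xs) - min(xs), max(ys) - min(ys))


def iou(a, b):
    """Intersection over union of two (x_min, y_min, width, height) boxes."""
    ax2, ay2 = a[0] + a[2], a[1] + a[3]
    bx2, by2 = b[0] + b[2], b[1] + b[3]
    inter_w = max(0.0, min(ax2, bx2) - max(a[0], b[0]))
    inter_h = max(0.0, min(ay2, by2) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


# Usage: a pedestrian outlined with a polygon, its box derived from the outline.
outline = [(10, 20), (30, 20), (30, 60), (10, 60)]
pedestrian = ObjectAnnotation(
    label="pedestrian",
    bbox=bbox_from_polygon(outline),
    polygon=outline,
    keypoints={"head": (20, 24)},
)
```

Deriving the box from the polygon keeps the two shapes consistent when both are delivered for the same object.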

To Enable Natural Language Processing (NLP)

We tag text with entities (names, places, dates) or user intent so machines can understand natural human language.

Use Case: AI chatbots extracting dates or order numbers from support queries.
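Entity annotations are often captured as character spans and then converted to per-token labels for training. The sketch below uses the BIO scheme (B- begins an entity, I- continues it, O is outside any entity). Whitespace tokenization and the `ORDER_ID` and `DATE` label names are simplifying assumptions chosen for the support-query example.

```python
def bio_tags(text, spans):
    """Convert character-offset entity spans into token-level BIO tags.

    text:  raw string, tokenized here by whitespace (a simplifying assumption)
    spans: list of (start, end, label) tuples, end exclusive
    """
    result = []
    pos = 0
    for token in text.split():
        start = text.index(token, pos)  # character offset of this token
        end = start + len(token)
        pos = end
        tag = "O"
        for s, e, label in spans:
            # Only tokens fully inside a span are tagged; partial overlaps stay "O".
            if start >= s and end <= e:
                tag = ("B-" if start == s else "I-") + label
                break
        result.append((token, tag))
    return result


query = "Order 48213 shipped on March 3"
tags = bio_tags(query, [(6, 11, "ORDER_ID"), (23, 30, "DATE")])
```

Here "48213" becomes B-ORDER_ID, while "March" and "3" become B-DATE and I-DATE, so a model can learn that a date may span several tokens.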

To Facilitate Medical Diagnostics

We perform precise pixel-level segmentation to identify anomalies in high-resolution imagery.

Use Case: Radiology AI identifying tumor shape and size in CT scans.
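Once a lesion is segmented as a binary mask, its size follows from the pixel count and the physical pixel spacing. A minimal sketch, assuming the mask is a list of rows of 0/1 values and that the spacing in millimetres is supplied from the scan's metadata (the 1.0 default below is only a placeholder):

```python
def mask_stats(mask, pixel_spacing_mm=(1.0, 1.0)):
    """Summarize a binary segmentation mask (list of rows of 0/1 values).

    pixel_spacing_mm: (row_spacing, col_spacing) in millimetres per pixel.
    The default is a placeholder; real values come from the scan metadata.
    """
    coords = [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    if not coords:
        return {"pixels": 0, "area_mm2": 0.0, "height_mm": 0.0, "width_mm": 0.0}
    rows = [r for r, _ in coords]
    cols = [c for _, c in coords]
    dr, dc = pixel_spacing_mm
    return {
        "pixels": len(coords),
        "area_mm2": len(coords) * dr * dc,                 # in-plane area of the region
        "height_mm": (max(rows) - min(rows) + 1) * dr,     # vertical extent
        "width_mm": (max(cols) - min(cols) + 1) * dc,      # horizontal extent
    }
```

This covers a single 2D slice; a volume estimate would also need the slice thickness.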

Data Classification

Organizing and categorizing files or data points into a predefined taxonomy to enable search, moderation, and analytics at scale.

To Automate Content Moderation

We categorize user-generated content against safety guidelines to instantly filter harmful material.

Use Case: Platforms automatically flagging NSFW or violent images before they are published.

To Structure E‑Commerce Inventories

We sort products into granular categories to ensure search and filters deliver relevant results.

Use Case: Tagging items as Clothing → Men's → Outerwear → Waterproof.
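A hierarchical taxonomy can be represented as nested categories, which makes it straightforward to reject a tag path that does not exist. The `TAXONOMY` excerpt below is hypothetical and only mirrors the example path; real taxonomies are defined per catalog.

```python
# Hypothetical taxonomy excerpt; each key maps to its subcategories ({} = leaf).
TAXONOMY = {
    "Clothing": {
        "Men's": {
            "Outerwear": {"Waterproof": {}, "Insulated": {}},
            "Shirts": {},
        },
        "Women's": {"Outerwear": {"Waterproof": {}}},
    },
}


def is_valid_path(taxonomy, path):
    """Return True if each category in path exists under the previous one."""
    node = taxonomy
    for category in path:
        if category not in node:
            return False
        node = node[category]
    return True


def leaf_paths(taxonomy, prefix=()):
    """Yield every root-to-leaf category path as a tuple."""
    for name, children in taxonomy.items():
        path = prefix + (name,)
        if children:
            yield from leaf_paths(children, path)
        else:
            yield path
```

Validating every tag against the taxonomy keeps filters consistent: an item cannot be tagged "Clothing → Outerwear" if that branch does not exist.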

To Analyze Sentiment & Feedback

We classify text by emotion or urgency to prioritize responses and measure public opinion.

Use Case: Classifying thousands of tweets as Positive, Negative, or Neutral.

Data Augmentation

Artificially expanding and enhancing datasets (synthetic examples, transformations, noise injection) to improve model performance and fairness.

To Solve Data Scarcity

We create synthetic variations of existing samples (flips, rotations, zooms) when real-world data is rare or expensive to collect.

Use Case: Rare-disease research expanding limited MRI datasets.
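The flip, rotate, and zoom transformations can be sketched on a single 2D image slice stored as a list of rows of pixel values. This stdlib-only version is for illustration; production pipelines would typically use an array or imaging library, and a 3D MRI volume could be handled slice by slice or with volumetric equivalents.

```python
def flip_horizontal(img):
    """Mirror each row left to right."""
    return [list(reversed(row)) for row in img]


def rotate_90(img):
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def zoom(img, factor):
    """Zoom in: center-crop by an integer factor, then nearest-neighbour
    upsample the crop back to the original size."""
    h, w = len(img), len(img[0])
    ch, cw = h // factor, w // factor
    top, left = (h - ch) // 2, (w - cw) // 2
    crop = [row[left:left + cw] for row in img[top:top + ch]]
    return [[crop[r * ch // h][c * cw // w] for c in range(w)] for r in range(h)]


def augment(img):
    """Return one variant per transformation for a single input slice."""
    return [flip_horizontal(img), rotate_90(img), zoom(img, 2)]
```

Which transformations are safe depends on the data: a horizontal flip of a brain MRI swaps the left and right hemispheres, which matters whenever the label depends on laterality.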

To Improve Model Robustness

We inject noise, blur, and lighting changes so models perform well in messy real-world conditions.

Use Case: Face ID working reliably in low light or rain.
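The three perturbations above can be sketched on a grayscale image stored as rows of 0 to 255 values (an assumption for illustration): additive Gaussian noise, a 3x3 box blur, and a brightness scale factor standing in for lighting changes. Every result is clipped back to the valid pixel range.

```python
import random


def clip(value, lo=0, hi=255):
    """Keep a pixel value inside the valid 8-bit range."""
    return max(lo, min(hi, value))


def add_gaussian_noise(img, sigma, rng):
    """Add zero-mean Gaussian noise with standard deviation sigma."""
    return [[clip(round(p + rng.gauss(0, sigma))) for p in row] for row in img]


def adjust_brightness(img, factor):
    """Scale intensities; factor < 1 darkens, factor > 1 brightens."""
    return [[clip(round(p * factor)) for p in row] for row in img]


def box_blur(img):
    """3x3 mean filter; edge pixels average only the neighbours that exist."""
    h, w = len(img), len(img[0])
    out = []
    for r in range(h):
        new_row = []
        for c in range(w):
            window = [
                img[rr][cc]
                for rr in range(max(0, r - 1), min(h, r + 2))
                for cc in range(max(0, c - 1), min(w, c + 2))
            ]
            new_row.append(round(sum(window) / len(window)))
        out.append(new_row)
    return out


# Usage: a seeded generator makes the noisy variant reproducible.
rng = random.Random(0)
sample = [[120, 130], [140, 150]]
noisy_dark = add_gaussian_noise(adjust_brightness(sample, 0.4), 10, rng)
```

Seeding the generator means the same augmented dataset can be regenerated exactly, which helps when comparing model runs.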

To Reduce Dataset Bias

We synthesize additional examples of under-represented classes to balance datasets and reduce biased model behavior.

Use Case: Generating synthetic fraudulent-transaction patterns to balance a dataset dominated by legitimate transactions.
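One widely used technique for synthesizing minority-class examples in tabular data is SMOTE, which interpolates between a minority sample and one of its nearest minority-class neighbours. The sketch below is a simplified, stdlib-only version for illustration; the feature vectors are assumed to be numeric transaction features, and libraries such as imbalanced-learn provide maintained implementations.

```python
import math
import random


def smote(minority, n_new, k=3, seed=0):
    """Generate n_new synthetic points, each on the line segment between a
    random minority sample and one of its k nearest minority neighbours."""
    if len(minority) < 2:
        raise ValueError("need at least two minority samples")
    rng = random.Random(seed)
    k = min(k, len(minority) - 1)
    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        base = minority[i]
        # Nearest neighbours by Euclidean distance, excluding the sample itself.
        neighbours = sorted(
            (p for j, p in enumerate(minority) if j != i),
            key=lambda p: math.dist(base, p),
        )[:k]
        other = rng.choice(neighbours)
        gap = rng.random()  # position along the segment, in [0, 1)
        synthetic.append([b + gap * (o - b) for b, o in zip(base, other)])
    return synthetic
```

To balance a dataset, `n_new` would be set to the difference between the majority and minority class counts. Because new points lie between real fraud examples, they stay within the region those examples already occupy rather than inventing unrelated patterns.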